I’d touched on this in the modwiggler topic, but it got a bit buried (understandably).

I write a lot of software (both dsp and drivers etc) around expressive controllers, using various protocols including MPE, and recently also within vcv.

I’m interested in how I could work in this area by creating plugins for MM.

I wanted to talk a little about what support exists in MM, focusing mostly on the technical side.

my assumption is that all (usb) IO is done via the M4 processor leaving the A7 for DSP.

this would mean that the midi comes in on the M4 and is then shipped over to the A7 (I had a look at gh, and I think there is a packet protocol between the chips).

ok, so let’s consider a concrete example…

there is no polyphonic cable support in MM.

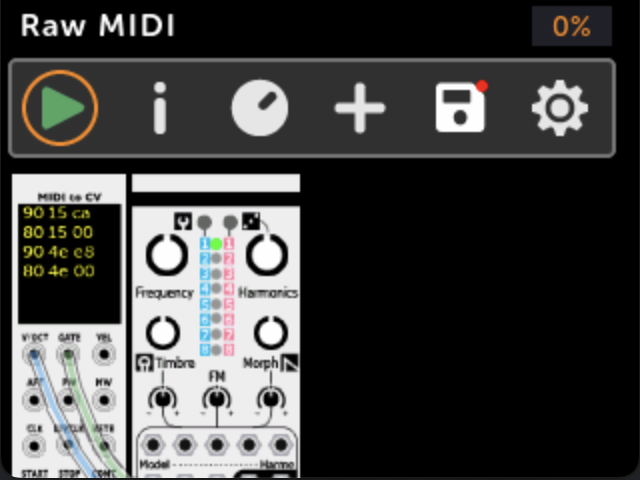

what if I wanted to write a polyphonic module that processed a midi stream, so that it can see multi-channel (mpe) midi? the module would then be a complete polyphonic voice, which I’d likely ‘mix down’ to (e.g.) a stereo output.
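to make that a bit more concrete, here’s a rough sketch of the kind of module internals I mean. this is plain C++ and deliberately uses no MM or vcv API: `Voice`, `MpeVoices`, `onMidi` and `process` are all hypothetical names, and I’m assuming one note per channel (as in typical MPE), the MPE default bend range of 48 semitones, and a trivial sine voice panned by channel.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical per-channel voice state; not a MetaModule or VCV Rack type.
struct Voice {
    bool gate = false;
    float note = 0.f;       // MIDI note number
    float bendSemis = 0.f;  // per-channel pitch bend, in semitones
    float pressure = 0.f;   // channel pressure, 0..1
    float timbre = 0.f;     // CC74, 0..1 (tracked but unused by the sine voice)
    float phase = 0.f;      // oscillator phase, 0..1
};

struct MpeVoices {
    // Assumption: MPE default bend range for member channels.
    static constexpr float kBendRange = 48.f;
    std::array<Voice, 16> voices{};

    // Feed one channel-voice message (2-byte messages pass d2 = 0).
    // Assumes one note per channel, so note-off ignores the note number.
    void onMidi(uint8_t status, uint8_t d1, uint8_t d2) {
        Voice &v = voices[status & 0x0F];
        switch (status & 0xF0) {
        case 0x90: // note on (velocity 0 is treated as note off)
            if (d2 > 0) { v.gate = true; v.note = d1; }
            else v.gate = false;
            break;
        case 0x80: // note off
            v.gate = false;
            break;
        case 0xB0: // control change, only CC74 (timbre) is tracked
            if (d1 == 74) v.timbre = d2 / 127.f;
            break;
        case 0xD0: // channel pressure
            v.pressure = d1 / 127.f;
            break;
        case 0xE0: { // pitch bend, 14-bit, centre at 8192
            int bend14 = (d2 << 7) | d1;
            v.bendSemis = (bend14 - 8192) / 8192.f * kBendRange;
            break;
        }
        default:
            break;
        }
    }

    // Render one sample of every active voice and mix down to stereo.
    void process(float sampleRate, float &left, float &right) {
        left = right = 0.f;
        for (std::size_t ch = 0; ch < voices.size(); ++ch) {
            Voice &v = voices[ch];
            if (!v.gate) continue;
            float freq = 440.f * std::pow(2.f, (v.note + v.bendSemis - 69.f) / 12.f);
            v.phase += freq / sampleRate;
            v.phase -= std::floor(v.phase);
            float s = std::sin(2.f * 3.14159265f * v.phase) * v.pressure;
            float pan = static_cast<float>(ch) / 15.f; // spread channels left to right
            left += s * (1.f - pan);
            right += s * pan;
        }
    }
};
```

the point is that all the polyphony lives inside the module: the only things it needs from the host are the raw per-channel midi messages going in and two audio outputs coming out, so no poly cables are involved.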

so this raises the question…

can a module get access to the full midi stream?

obviously, this could not be done via a ‘jack input’ since there is no polyphonic cable support.

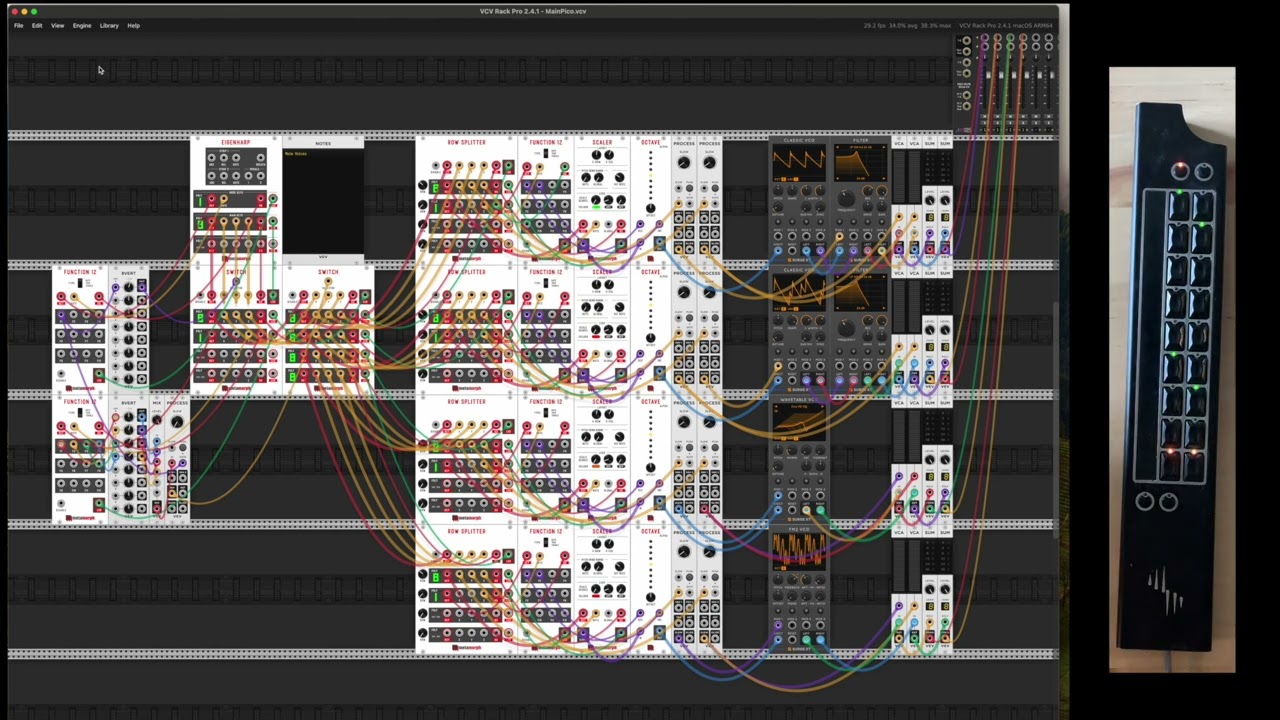

here’s a little video of what I’ve done connecting the Eigenharps to vcv.

not really feasible on MM, I suspect, as I’d guess I’d need to write custom firmware for the M4 to support the usb protocol for the eigenharp… and the lack of poly cables in MM would be problematic.

but it does show the kind of thing I’m interested in, even if I approach it in a different way.

btw: I’m also interested in i2c support in this area, but that’s a question for another day.